Published 2026-01-19

So your microservices are talking, things are humming along, and then… bam. One little service decides to take a nap, or throws a fit, and suddenly everything starts toppling over. It’s like watching a carefully built tower of dominoes get nudged. One falls, and the whole chain reaction begins. Ever felt that frustration? That moment when a single point of failure quietly drags the entire system down?

It’s not about blame. Services, like any complex machinery, can have their off days—network hiccups, unexpected load spikes, a third-party API going silent. But must a single stutter bring everything to its knees? The old way of hoping everything stays perfect? That’s like running delicate gears without any safety latch. Sooner or later, something grinds to a halt.

Enter a rather elegant idea: the circuit breaker. Picture it not as some complex abstract concept, but as a simple, intelligent switch in your system’s wiring. Borrowed from electrical engineering, its logic is beautifully straightforward. When a particular service call starts failing repeatedly, this “breaker” trips. It stops sending requests to that troubled service for a while, giving it space to recover, and in the meantime fails fast, either rerouting traffic or returning a predefined fallback response. Failures stop cascading. No more waiting indefinitely for a response that never comes. The system protects itself.

But how does this translate from idea to real, breathing code in a microservices landscape? It’s about a pattern, not just a tool. Implementing a circuit breaker means adding a layer of resilience that watches and acts. It monitors for failures, say five timeouts in a row, and then flips the state from “closed” (normal operation) to “open” (stop the flow). After a cooldown period, it cautiously lets a trial request through to see if the service is back, a state often called “half-open.” If the trial succeeds, the breaker closes again; if it fails, the breaker reopens and the cooldown restarts. It’s resilience designed right into the conversation between your services.
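To make those three states concrete, here is a minimal sketch in Python. It assumes a single-threaded caller and treats any exception as a failure; the names `CircuitBreaker`, `CircuitOpenError`, `failure_threshold`, and `cooldown_seconds` are made up for illustration and do not come from any particular library.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """Illustrative breaker: closed -> open -> half-open -> closed (or open again)."""

    def __init__(
        self,
        failure_threshold: int = 5,       # consecutive failures before tripping
        cooldown_seconds: float = 30.0,   # how long to stay open before a trial call
        clock: Callable[[], float] = time.monotonic,  # injectable for tests
    ) -> None:
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self._clock = clock
        self._consecutive_failures = 0
        self._opened_at: Optional[float] = None  # None means the circuit is closed

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.cooldown_seconds:
            return "half-open"  # cooldown elapsed, a trial call is allowed
        return "open"

    def call(self, func: Callable[[], T]) -> T:
        state = self.state
        if state == "open":
            # Fail fast instead of waiting on a service that is known to be struggling.
            raise CircuitOpenError("circuit is open; call rejected")
        try:
            result = func()
        except Exception:
            self._consecutive_failures += 1
            # A failed trial call reopens immediately; otherwise trip at the threshold.
            if state == "half-open" or self._consecutive_failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success resets the counter and closes the circuit.
        self._consecutive_failures = 0
        self._opened_at = None
        return result
```

You would wrap each outbound call, for example `breaker.call(lambda: fetch_profile(user_id))`, where `fetch_profile` stands in for whatever client function you already have. A production version would also need locking and a limit on concurrent trial calls, which this sketch leaves out.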

Why bother, you might ask? Well, think about what it brings. First, isolation. A failure is contained. One faulty service won’t drain your thread pool or bog down your entire application. Second, graceful degradation. Instead of a full-blown error page, users might see a slightly older cached version of data, or a friendly “feature temporarily simplified” message. The experience stays functional. And third, automatic recovery. The system self-heals. When the troubled service comes back online, the circuit breaker detects it and gradually restores normal traffic. It’s like having a diligent, silent guardian built into your architecture.
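Graceful degradation is easier to picture with a small example. The sketch below, again in Python, remembers the last good answer for each key and serves it, flagged as stale, when the live call fails. `LastKnownGood` and its `fetch` callable are hypothetical names, and the in-memory dictionary is an assumption; a real system would more likely use a shared cache with expiry.

```python
from typing import Callable, Dict, Generic, Hashable, Tuple, TypeVar

V = TypeVar("V")


class LastKnownGood(Generic[V]):
    """Serve the most recent successful value when the live fetch fails."""

    def __init__(self, fetch: Callable[[Hashable], V]) -> None:
        self._fetch = fetch
        self._last_good: Dict[Hashable, V] = {}

    def get(self, key: Hashable) -> Tuple[V, bool]:
        """Return (value, is_stale). Re-raise if there is nothing to fall back on."""
        try:
            value = self._fetch(key)
        except Exception:
            if key in self._last_good:
                # Degrade gracefully: slightly older data beats an error page.
                return self._last_good[key], True
            raise
        self._last_good[key] = value
        return value, False
```

The `is_stale` flag lets the caller decide how to present the result, for instance by showing a “data may be out of date” notice instead of hiding the degradation entirely.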

Now, you might wonder, isn’t this just extra complexity? Not when it’s done thoughtfully. The key is in the tuning: setting the right failure threshold, the cooldown duration, and useful fallback logic. Done well, it becomes a natural part of the system’s rhythm—something that works so seamlessly you almost forget it’s there, until the day it saves you from a major outage.
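One way to keep that tuning visible is to put the knobs in a small, validated settings object rather than scattering magic numbers through the code. This is only a sketch: `BreakerSettings`, the dependency names, and every number below are placeholders, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BreakerSettings:
    """Tunable knobs for one downstream dependency (illustrative only)."""

    failure_threshold: int = 5      # consecutive failures before the breaker trips
    cooldown_seconds: float = 30.0  # how long to stay open before a trial request

    def __post_init__(self) -> None:
        if self.failure_threshold < 1:
            raise ValueError("failure_threshold must be at least 1")
        if self.cooldown_seconds <= 0:
            raise ValueError("cooldown_seconds must be positive")


# Hypothetical per-dependency tuning: a critical, slow-to-recover service gets a
# quick trip and a long rest; a cheap, optional one is allowed to be flakier.
SETTINGS = {
    "payments": BreakerSettings(failure_threshold=3, cooldown_seconds=60.0),
    "recommendations": BreakerSettings(failure_threshold=10, cooldown_seconds=5.0),
}
```

Validating at construction time means a typo in configuration fails loudly at startup instead of quietly producing a breaker that never trips.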

Speaking of thoughtful implementation, this is where the philosophy behind a solution matters. It’s not about slapping on a library and calling it a day. It’s about understanding that resilience is a core feature, not an afterthought. In the world of distributed systems, things will fail. The goal is to fail smart, to design systems that bend instead of break.

Consider a simple analogy. Imagine a team of relay runners. If one runner stumbles, the old way would force the next runner to wait indefinitely for the baton, freezing the whole race. With a circuit breaker pattern, the team has a plan. After a few missed handoffs, the next runner proceeds with a backup baton, keeping the race moving while the recovering runner gets back in lane. The race goes on.

So, what does embracing this pattern feel like in practice? It brings a certain peace of mind. Development teams can sleep a bit better, knowing their architecture has a built-in buffer against the unexpected. System performance charts stop looking like heart attack readings during partial outages. Users experience consistency, even when parts of the backend are having a rough day.

It’s a shift from a fragile, interconnected web to a resilient mesh. Each service becomes a bit more self-sufficient, a bit more responsible for its own boundaries. Communication becomes robust, not just efficient.

Of course, no pattern is a magic bullet. It requires understanding your specific failure modes. What constitutes a “failure” for your service? A timeout? A specific HTTP 500 error? How long should the circuit stay open? The answers depend on your unique context—your traffic patterns, your SLAs, your user expectations.
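Those questions usually end up as one small, explicit function. The Python sketch below shows one possible policy, assumed purely for illustration: timeouts, connection errors, and HTTP 5xx responses count against the breaker, while 4xx responses do not, since they usually point to a problem with the request rather than with the service. `counts_as_failure` is a hypothetical helper name.

```python
from typing import Optional


def counts_as_failure(
    status_code: Optional[int] = None,
    error: Optional[BaseException] = None,
) -> bool:
    """Decide whether one call outcome should count toward tripping the breaker."""
    if error is not None:
        # Built-in TimeoutError and ConnectionError suggest the service is unhealthy.
        return isinstance(error, (TimeoutError, ConnectionError))
    if status_code is not None:
        # Server-side errors count; client errors such as 404 or 422 do not.
        return 500 <= status_code <= 599
    return False
```

Your own policy may differ. Some teams also count 429 responses or slow-but-successful calls, and that choice is exactly the kind of context-specific decision described above.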

This journey toward resilience often starts with a question: What’s the cost of not having a safety net? For many, the answer becomes clear after that first major cascade failure. The cleanup, the frantic debugging, the user complaints—it all adds up. Investing in patterns like the circuit breaker is, in many ways, investing in stability and predictability.

In the end, building with microservices is about embracing distributed complexity while managing risk. The circuit breaker pattern is one of those essential tools in the toolbox—a simple, proven concept that helps your system handle the real world, where things rarely go perfectly. It’s about designing for reality, not just for the ideal. And in that reality, a little intelligent switching can make all the difference, keeping your digital machinery running smoothly, even when a few gears momentarily slip.

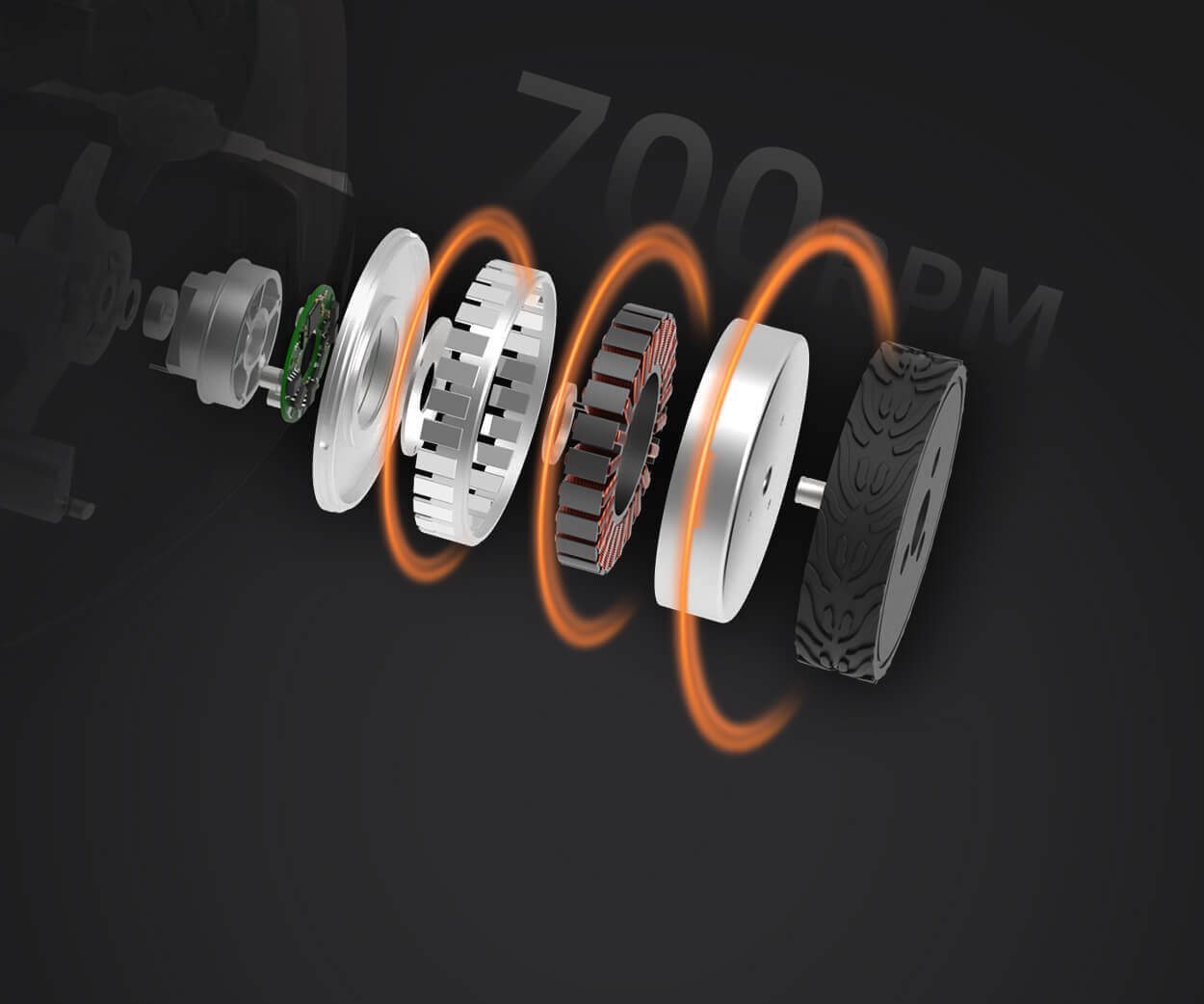


